Our network includes the world’s leading minds and institutions

Forum AI’s network includes former cabinet officials, Fortune 500 executives, leading researchers, and analysts who move markets.

Apply to the network

Apply to the network

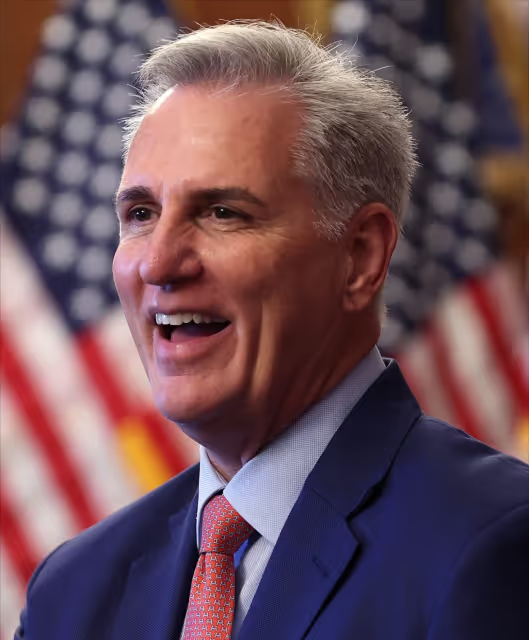

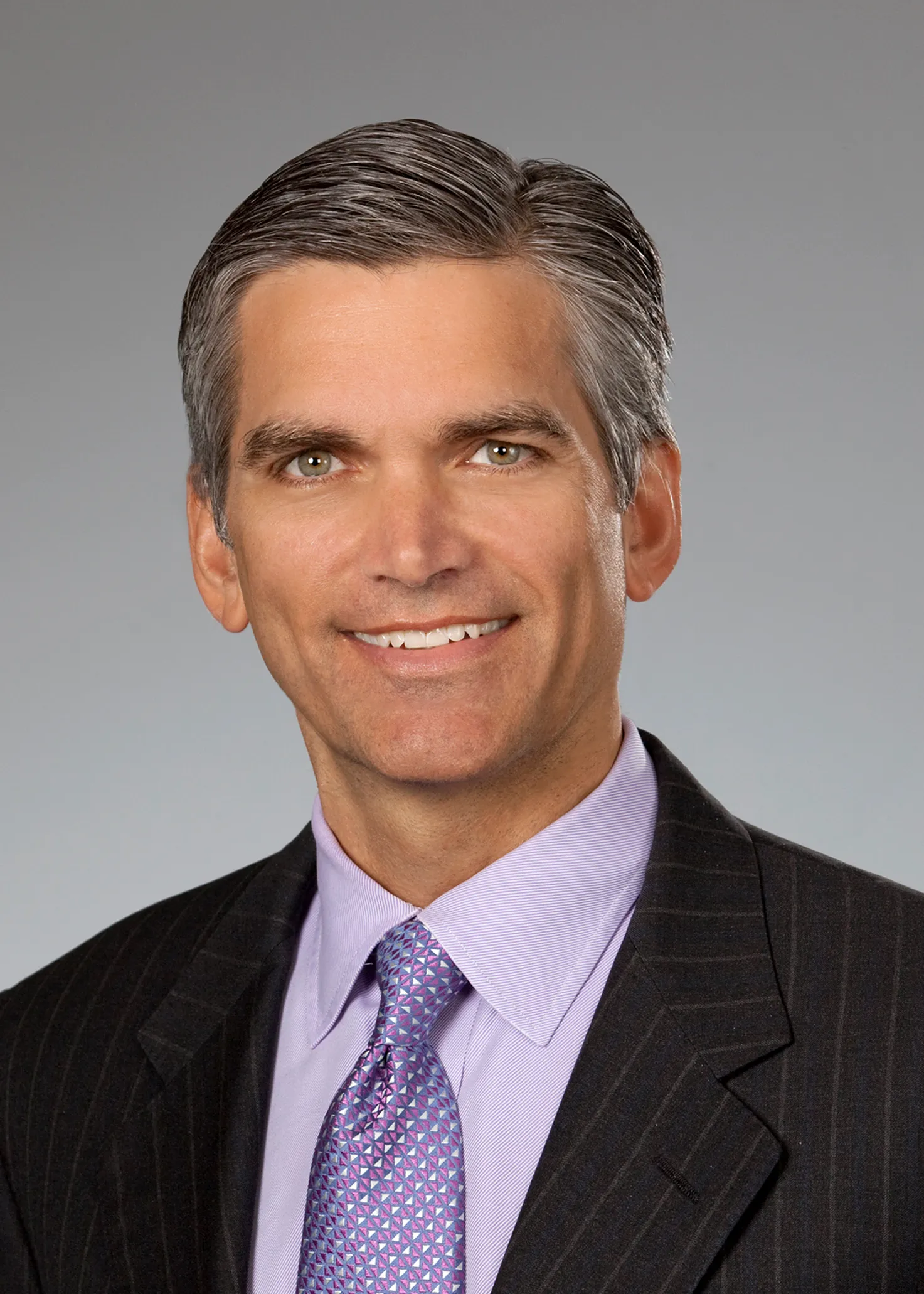

Kevin McCarthy

Former Speaker of the House

Anne Neuberger

Former Deputy National Security Advisor

Sir Niall Ferguson

Historian and author; Senior Fellow, Hoover Institution, Stanford

Antony Blinken

Former United States Secretary of State

Scott Jennings

Republican political strategist

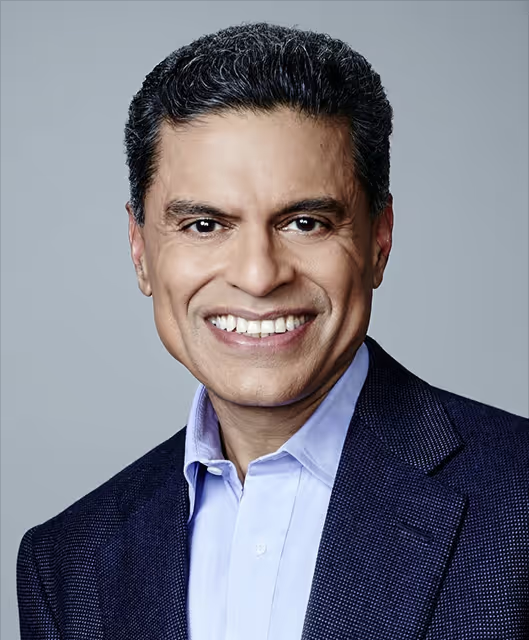

Fareed Zakaria

Author and host, CNN

Salena Zito

Author and journalist

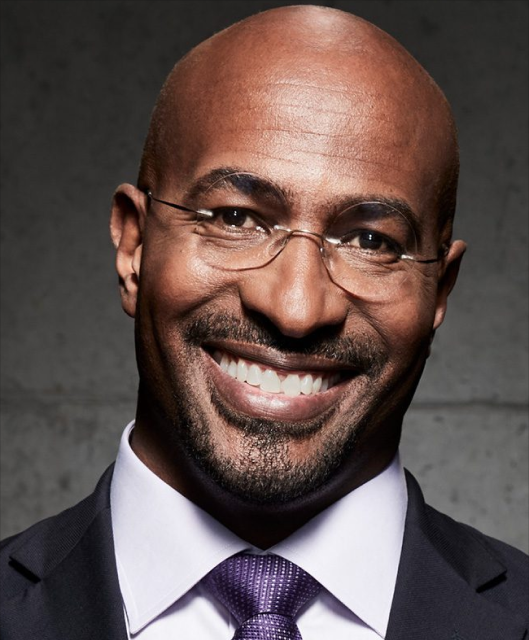

Van Jones

CNN host; Founder, WorkBetterTogether.ai

Ory Rinat

Former White House Chief Digital Officer

Iván Duque Márquez

Former President of Colombia

Dr. Yen Pottinger

Infectious disease specialist

Vuk Jeremić

Former President, UN General Assembly

Richard Goldberg

Former Trump administration foreign policy advisor

Kristen Soltis Anderson

Republican pollster

Avik Roy

Former advisor to Secretary of State Marco Rubio

Sebastian Kurz

Former Chancellor of Austria

Elizabeth Economy

Senior Fellow, Hoover Institution

Eliana Johnson

Editor-in-Chief, The Washington Free Beacon

Reihan Salam

President, Manhattan Institute for Policy Research

Diogo Mónica

Partner, Haun Ventures

Ian Bremmer

Founder and President, Eurasia Group

Oscar Munoz

Former CEO, United Airlines

Jackie Reses

Chair and CEO, Lead Bank

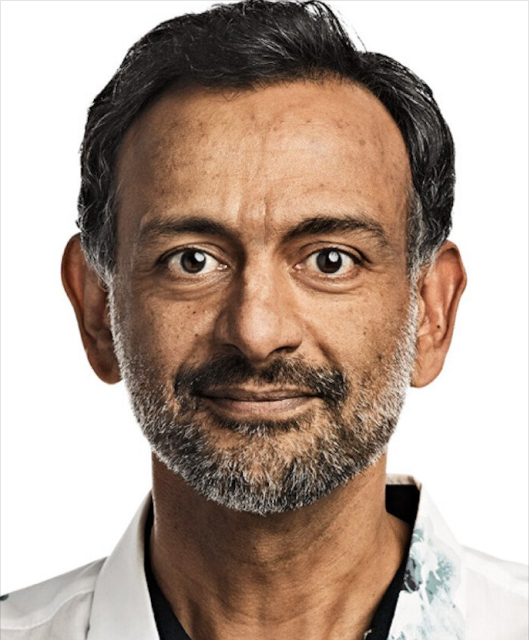

Dr. Jordan Shlain

Physician, Private Medical

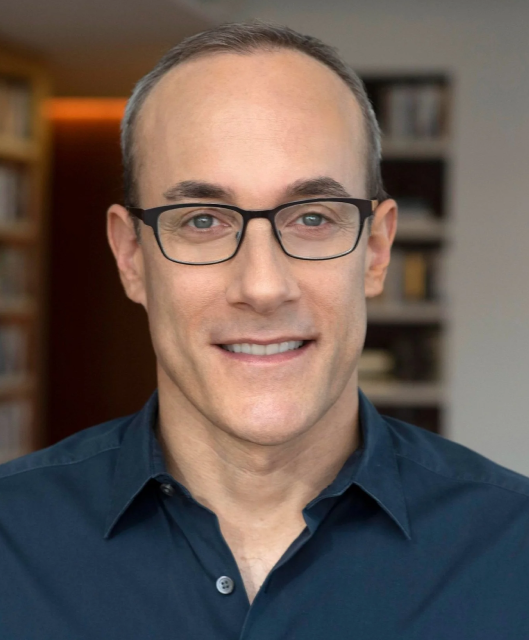

Rob Reich

Senior Fellow, Stanford Institute for Human-Centered AI

Arianna Huffington

Founder and CEO, Thrive Global

Glenn Hubbard

Former Chair, White House Council of Economic Advisers

Poppy Harlow

Former business anchor, CNN

Dan Senor

Host, "Call Me Back" podcast; Author, Start Up Nation

Jason Bordoff

Founding Director, Center on Global Energy Policy at Columbia University SIPA

Grant Harris

Former Assistant Secretary of Commerce

Sebastian Mallaby

Senior Fellow for International Economics, Council on Foreign Relations

Dr. Samantha Boardman

Professor of Psychiatry, Cornell

Jonathan Levin

Founder, Chainalysis

Bethany McLean

Author, Enron: The Smartest Guys in the Room

Paul Grewal

Chief Legal Officer, Coinbase

Benedict Evans

Tech investor and analyst

Alex Kantrowitz

Host, "Big Tech" podcast

Henning Beck

Neuroscientist

Michael R. Strain

Director of Economic Policy Studies, American Enterprise Institute

Rob Kee

Former Assistant Director, CIA

Russell Wald

Executive Director, Stanford HAI

Paul Root Wolpe

Director, Emory Center for Ethics

Brian Morgenstern

Former White House Deputy Press Secretary

Boaz Weinstein

Founder & CIO, Saba Capital

Bill Simon

Former President & CEO, Walmart U.S.

Dan Ives

Tech Analyst, Wedbush

Gene Munster

Co-founder, Deepwater Asset Management

Tad Smith

Former CEO, Sotheby’s

Dr. Tony Pan

Co-founder & CEO, Modern Hydrogen

David Aronoff

General Partner, Flybridge

Ben Lamm

Co-founder & CEO, Colossal Biosciences

Raphael Ouzan

Founder & CEO, A.Team